The Architects of Data: The Role of a Data Engineer

A Data Engineer is a specialized software engineer who designs, builds, and manages the systems that collect, store, and transform raw data into a clean, reliable, and usable format. They are the architects of an organization's data infrastructure, creating the robust data pipelines and warehouses that are the foundation for all data science and analytics.

Hiring a Data Engineer is a foundational investment in becoming a data-driven organization. They are responsible for the "plumbing" of the data world, ensuring that data analysts and machine learning engineers have a steady and trustworthy supply of high-quality data. Without that supply, advanced analytics and AI initiatives have nothing reliable to build on.

Expertise in ETL and Data Pipelines

The core responsibility of a Data Engineer is to build and maintain ETL (Extract, Transform, Load) or ELT pipelines. A proficient candidate must have deep, hands-on experience in designing these pipelines to move data from various source systems (like application databases, logs, and third-party APIs) into a centralized data warehouse or data lake.
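The three ETL stages can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the inline CSV stands in for a source system, an in-memory SQLite database stands in for the warehouse, and the `users` table, its columns, and the function names are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (assumption for illustration).
RAW_CSV = """user_id,signup_date,country
1,2024-01-05,us
2,2024-01-06,DE
3,,fr
"""

def extract(text):
    """Extract: parse the raw CSV text into a list of dict records."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(records):
    """Transform: drop rows without a signup date and normalize country codes."""
    cleaned = []
    for record in records:
        if not record["signup_date"]:
            continue  # incomplete row, skipped in this sketch
        cleaned.append(
            (int(record["user_id"]), record["signup_date"], record["country"].upper())
        )
    return cleaned

def load(conn, rows):
    """Load: write the cleaned rows into the target table in one transaction."""
    with conn:
        conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
conn.execute("CREATE TABLE users (user_id INTEGER, signup_date TEXT, country TEXT)")
load(conn, transform(extract(RAW_CSV)))

loaded = conn.execute("SELECT * FROM users ORDER BY user_id").fetchall()
print(loaded)
```

In an ELT variant, the raw rows would be loaded first and the cleaning step would run as SQL inside the warehouse.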

They must be skilled with a workflow orchestration tool like Apache Airflow to schedule, monitor, and manage these complex data flows. The ability to write a reliable, efficient, and idempotent data pipeline is the most fundamental and critical skill for any data engineering role.
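Idempotency here means that running the same load twice leaves the target in the same state as running it once, which matters whenever a scheduler retries a failed task or backfills a date. One common way to get there is to replace a whole partition instead of appending to it. The sketch below assumes a hypothetical `daily_orders` table partitioned by `order_date` and uses an in-memory SQLite database in place of a real warehouse.

```python
import sqlite3

def load_partition(conn, run_date, rows):
    """Replace all rows for run_date so that reruns do not create duplicates."""
    with conn:  # single transaction: the delete and insert commit together
        conn.execute("DELETE FROM daily_orders WHERE order_date = ?", (run_date,))
        conn.executemany(
            "INSERT INTO daily_orders (order_date, order_id, amount) VALUES (?, ?, ?)",
            [(run_date, order_id, amount) for order_id, amount in rows],
        )

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE daily_orders (order_date TEXT, order_id INTEGER, amount REAL)"
)

batch = [(1, 19.99), (2, 5.00)]
load_partition(conn, "2024-01-01", batch)
load_partition(conn, "2024-01-01", batch)  # simulated retry of the same run

row_count = conn.execute("SELECT COUNT(*) FROM daily_orders").fetchone()[0]
print(row_count)  # 2, not 4
```

A plain `INSERT` without the preceding `DELETE` would double the row count on the retry. Warehouses that support `MERGE` or partition overwrite offer other routes to the same property.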

Strong Programming and SQL Skills

Data engineers are, first and foremost, strong software engineers. They must have expert-level proficiency in a programming language commonly used for data processing, with Python being the most common choice thanks to its rich ecosystem of data manipulation libraries. Familiarity with Scala or Java is also valuable, especially in the big data ecosystem.

Furthermore, a deep and practical mastery of SQL is absolutely essential. They need to be able to write complex, performant queries to transform and aggregate data within the data warehouse. An engineer who can write an optimized window function like ROW_NUMBER() OVER (PARTITION BY ... ORDER BY ...) is an engineer who truly understands data manipulation.
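A typical use of that window function is keeping only the most recent record per key. The following sketch runs the query through Python's built-in `sqlite3` module, which needs an underlying SQLite of version 3.25 or later for window functions. The `customer_updates` table and its data are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customer_updates (customer_id INTEGER, email TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO customer_updates VALUES (?, ?, ?)",
    [
        (1, "old@example.com", "2024-01-01"),
        (1, "new@example.com", "2024-02-01"),
        (2, "solo@example.com", "2024-01-15"),
    ],
)

# Number the rows within each customer, newest first, then keep row 1.
latest_sql = """
SELECT customer_id, email
FROM (
    SELECT customer_id,
           email,
           ROW_NUMBER() OVER (
               PARTITION BY customer_id
               ORDER BY updated_at DESC
           ) AS rn
    FROM customer_updates
)
WHERE rn = 1
ORDER BY customer_id
"""
latest = conn.execute(latest_sql).fetchall()
print(latest)  # [(1, 'new@example.com'), (2, 'solo@example.com')]
```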

Data Warehousing and Data Modeling

A Data Engineer is responsible for the design and maintenance of the central repository of an organization's data: the data warehouse. They must have a strong understanding of data warehousing concepts and be proficient with a modern cloud data warehouse like Snowflake, Google BigQuery, or Amazon Redshift.

A key skill is data modeling. They need to be able to design a warehouse schema that is optimized for analytical queries. This requires a solid understanding of data modeling techniques, such as the Kimball methodology, and the ability to design logical and efficient star schemas with fact and dimension tables.
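As a rough illustration of a star schema, the sketch below defines one fact table surrounded by two dimension tables and runs a typical analytical query against it. The table names, columns, and rows are hypothetical, and SQLite is used only so the example is self-contained; a cloud warehouse would use its own DDL dialect.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    product_name TEXT,
    category TEXT
);
CREATE TABLE dim_date (
    date_key INTEGER PRIMARY KEY,
    full_date TEXT,
    year INTEGER
);
-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    quantity INTEGER,
    revenue REAL
);
INSERT INTO dim_product VALUES
    (1, 'Keyboard', 'Hardware'),
    (2, 'Editor License', 'Software');
INSERT INTO dim_date VALUES
    (20240101, '2024-01-01', 2024),
    (20240102, '2024-01-02', 2024);
INSERT INTO fact_sales VALUES
    (1, 20240101, 2, 100.0),
    (1, 20240102, 1, 50.0),
    (2, 20240101, 3, 300.0);
""")

# Analytical query: join the fact to its dimensions, filter, and aggregate.
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.revenue) AS total_revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date AS d ON d.date_key = f.date_key
    WHERE d.year = 2024
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(revenue_by_category)  # [('Hardware', 150.0), ('Software', 300.0)]
```

The appeal of the layout is that most reporting questions reduce to the same shape: join the fact table to a few dimensions, filter on dimension attributes, and sum the measures.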

Big Data Technologies

For organizations that deal with massive volumes of data, expertise in the big data ecosystem is a critical requirement. A candidate should have hands-on experience with distributed computing frameworks, with Apache Spark being the most important and widely used tool for large-scale data processing.

They should be able to write Spark jobs in Python (PySpark) or Scala to process terabytes of data in a distributed and parallel manner. Familiarity with other parts of the Hadoop ecosystem, like HDFS for distributed storage and Hive for data warehousing on top of Hadoop, is also valuable.

Cloud and Infrastructure Knowledge

Modern data engineering is done predominantly in the cloud. A Data Engineer must have strong, practical experience with a major cloud provider, such as AWS, GCP, or Azure. They need to be proficient with the core data services offered by their chosen platform.

This includes expertise in services for data storage (like S3 or Google Cloud Storage), data warehousing (BigQuery, Redshift), and managed data pipeline services. They should also be comfortable with the underlying infrastructure, including virtual machines, networking, and security, as their pipelines run on top of this foundation.

Data Quality and Governance

The goal of a data engineer is not just to move data, but to deliver trustworthy data. A top-tier candidate will have a strong focus on data quality and governance. They must be able to implement automated checks and validation steps within their pipelines to ensure the data is accurate, complete, and consistent.
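An automated check of this kind can be as simple as a function that inspects each batch before it is loaded. The sketch below is one possible shape, assuming rows arrive as dictionaries. The function name, the `order_id` and `amount` fields, and the three rules (required fields present, key unique, amount not negative) are illustrative assumptions rather than a standard.

```python
def validate_rows(rows, required_fields, unique_key):
    """Return a list of human-readable problems found in a batch of dict rows."""
    problems = []
    seen_keys = set()
    for index, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required_fields:
            if row.get(field) in (None, ""):
                problems.append(f"row {index}: missing {field}")
        # Consistency: the key must not repeat within the batch.
        key = row.get(unique_key)
        if key in seen_keys:
            problems.append(f"row {index}: duplicate {unique_key}={key}")
        seen_keys.add(key)
        # Accuracy: a simple range rule on a hypothetical amount field.
        amount = row.get("amount")
        if amount is not None and amount < 0:
            problems.append(f"row {index}: negative amount {amount}")
    return problems

batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 1, "amount": 7.5},
    {"order_id": 3, "amount": -4.0},
]
problems = validate_rows(batch, required_fields=["order_id", "amount"], unique_key="order_id")
for problem in problems:
    print(problem)
```

A pipeline might fail the run, quarantine the offending rows, or only raise an alert when the list is non-empty; which response is right depends on how downstream consumers use the data.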

They should also be familiar with data governance concepts, such as creating a data catalog to document data sources and definitions, and implementing access controls to ensure that data is used securely and appropriately. This commitment to quality is what transforms a data swamp into a reliable source of truth.

Streaming Data and Real-Time Processing

While batch processing is still common, the need for real-time data is growing rapidly. A forward-thinking Data Engineer should have experience with streaming data technologies. This requires proficiency with a message broker like Apache Kafka for ingesting high-throughput data streams.

They should also have experience with a stream processing framework like Apache Flink or Spark Structured Streaming. The ability to build a pipeline that can process and analyze data in real time as it arrives is a highly valuable and in-demand skill for building modern, event-driven applications.
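A core idea in stream processing is windowing: grouping an unbounded flow of events into fixed time buckets so they can be aggregated. The sketch below shows tumbling (fixed, non-overlapping) windows in plain Python over a small in-memory list. It deliberately leaves out what a real stream processor adds, such as late or out-of-order events, watermarks, and fault-tolerant state, and the event format of `(timestamp_seconds, event_type)` is an assumption.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, event_type) in fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for timestamp, event_type in events:
        # Round the timestamp down to the start of its window.
        window_start = timestamp - (timestamp % window_seconds)
        counts[(window_start, event_type)] += 1
    return dict(counts)

# Hypothetical events as (timestamp in seconds, event type).
events = [
    (0, "click"),
    (12, "click"),
    (59, "view"),
    (60, "click"),
    (61, "view"),
    (125, "click"),
]
counts = tumbling_window_counts(events, window_seconds=60)
for (window_start, event_type), count in sorted(counts.items()):
    print(window_start, event_type, count)
```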

DevOps and Infrastructure as Code

Data engineering infrastructure, like any other software infrastructure, should be managed with modern DevOps practices. A candidate should be comfortable with Infrastructure as Code (IaC) tools like Terraform to provision and manage their cloud resources in a repeatable and version-controlled way.

They also need to be skilled at using containerization with Docker to package their data processing applications and be familiar with CI/CD principles for automating the testing and deployment of their data pipelines. This "DataOps" mindset is crucial for building a scalable and professional data organization.

Version Control and Collaboration

Data pipelines are code, and they must be managed with the same discipline as any other software project. A Data Engineer must be an expert with Git and a platform like GitHub or GitLab. They need to be able to version control their pipeline code, SQL transformations, and infrastructure definitions.

A strong commitment to code reviews and a collaborative development workflow is essential. Data engineering is a team sport, and a developer who can work effectively with other engineers, analysts, and data scientists is a key contributor to a successful data team.

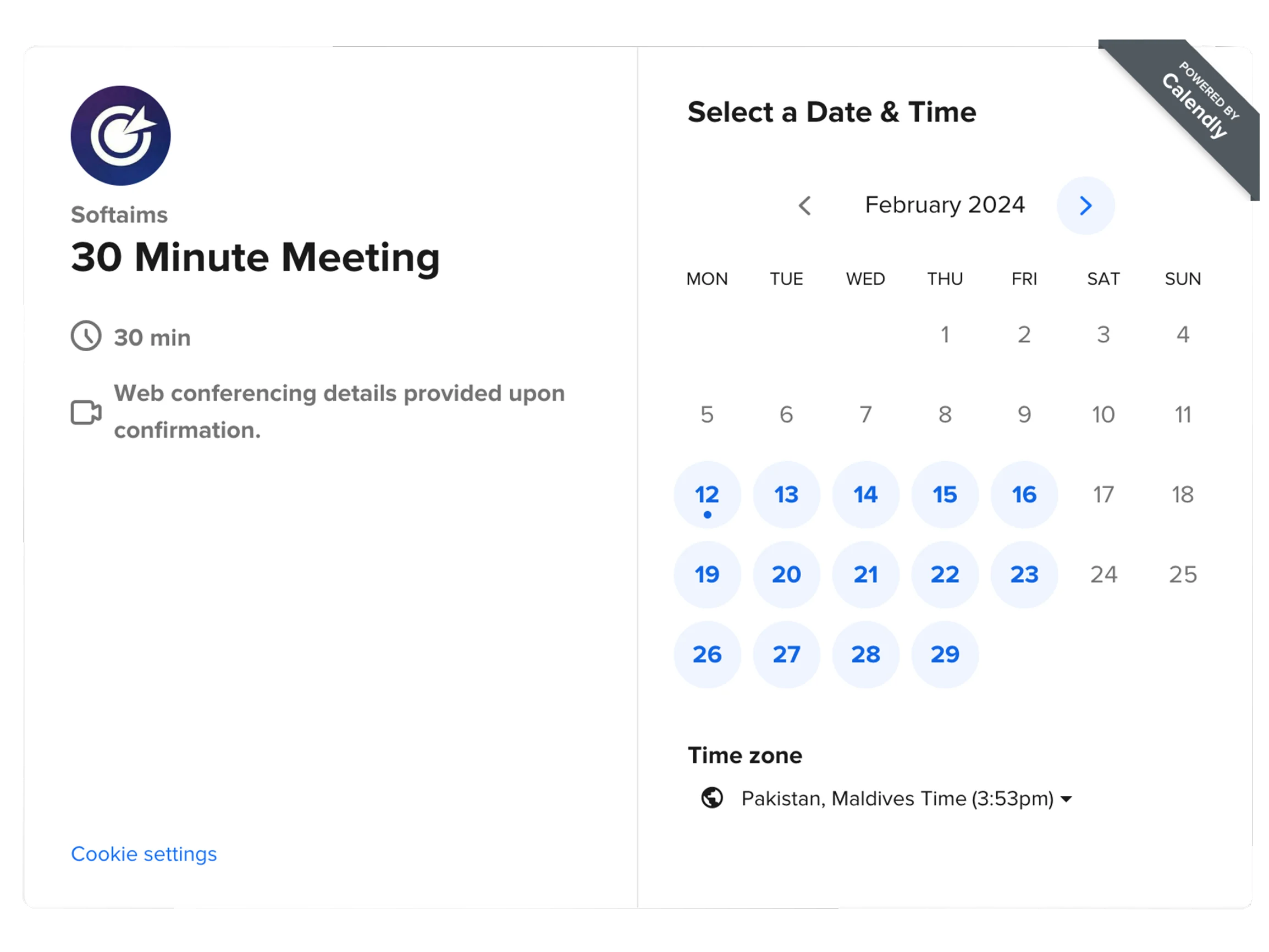

How Much Does It Cost to Hire a Data Engineer

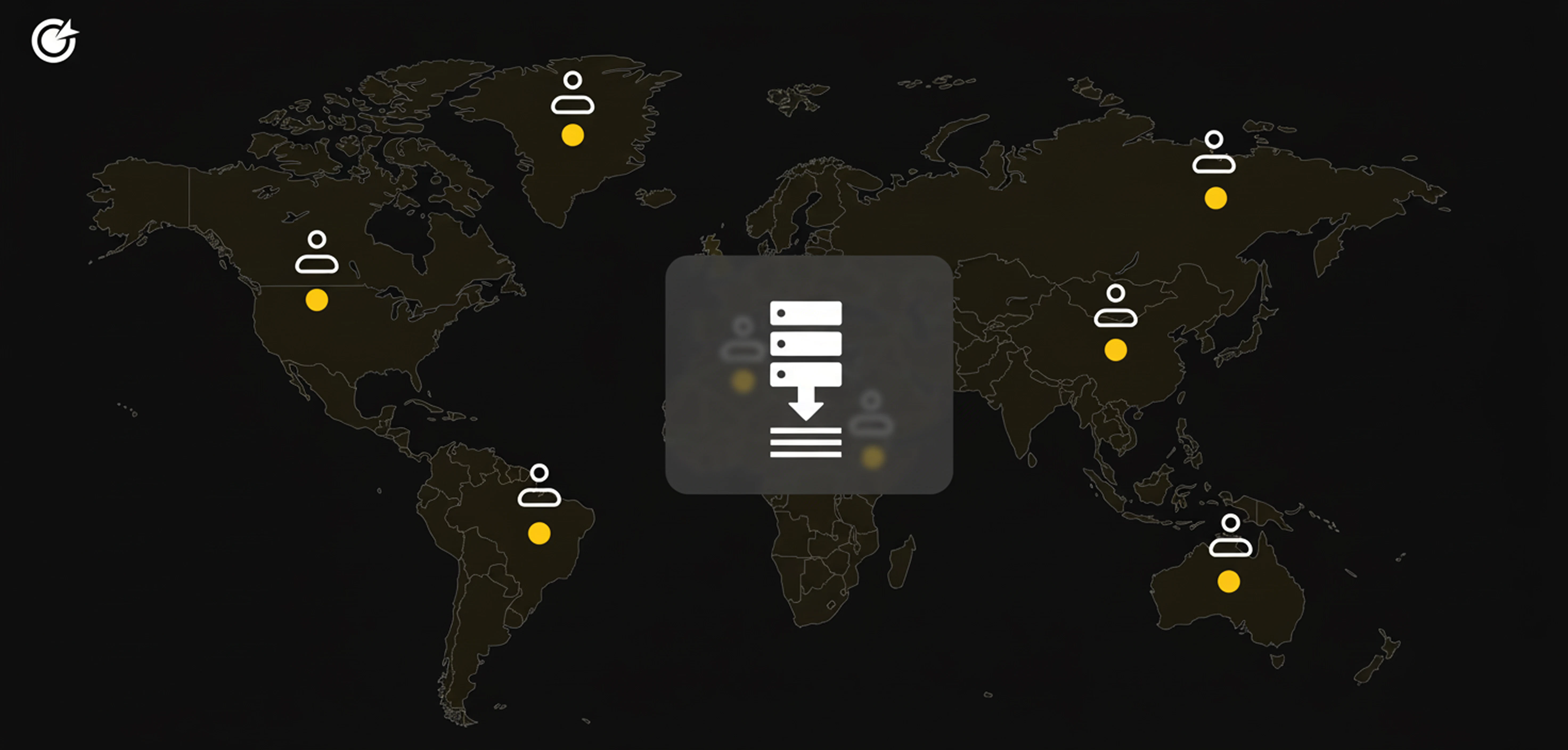

The cost to hire a Data Engineer is high, reflecting their critical role as the foundation of any data-driven company and the intense demand for their specialized skills. The salary is heavily influenced by their geographic location, years of experience, and their expertise in high-demand technologies like Spark, Airflow, and cloud data warehouses.

Tech hubs in North America and Western Europe typically lead the world in salary expectations. The following table provides an estimated average annual salary for a mid-level Data Engineer to illustrate these global differences.

| Country | Average Annual Salary (USD) |
| --- | --- |
| United States | $145,000 |
| Switzerland | $135,000 |
| United Kingdom | $95,000 |
| Germany | $92,000 |
| Canada | $115,000 |
| Poland | $70,000 |
| Ukraine | $68,000 |
| India | $50,000 |
| Brazil | $60,000 |
| Australia | $118,000 |

When to Hire Dedicated Data Engineers Versus Freelance Data Engineers

Hiring a dedicated, full-time Data Engineer is the right choice when you are building the core data infrastructure for your company. This is a foundational, long-term role that requires deep, ongoing ownership of the data pipelines, warehouse, and overall architecture. A dedicated engineer is essential for any company that is serious about becoming data-driven.

Hiring a freelance Data Engineer is a more tactical decision, perfect for specific, well-defined projects. This is an excellent model for building a single data pipeline from a new source, migrating an existing ETL process to a new technology, or getting expert help to set up an initial data warehouse. Freelancers can provide specialized expertise to get a project done efficiently.

Why Do Companies Hire Data Engineers

Companies hire Data Engineers to build the single source of truth for their business. In today's world, data is generated from a multitude of disconnected systems, and a data engineer's primary job is to collect all of this raw, messy data and transform it into a clean, centralized, and reliable resource that the entire organization can trust and use for decision-making.

Ultimately, data engineers are hired because they enable all other data roles to be effective. Without the clean, reliable data pipelines and warehouses that data engineers build, data analysts cannot create accurate reports, and machine learning engineers cannot train effective models. They are the critical first step in unlocking the immense value that is hidden within an organization's data.

In conclusion, hiring a top-tier Data Engineer requires finding a candidate who is a unique combination of a skilled software engineer, a database architect, and a systems thinker. The ideal professional will combine mastery of Python, SQL, and big data technologies with a practical, hands-on approach to building and managing a modern, cloud-based data stack. By prioritizing these skills, organizations can build the powerful and reliable data infrastructure that is the essential foundation for any successful data, analytics, or AI strategy.